Analyzing Faces

Using basic facial-recognition technology, the researchers weeded through 130,741 public photos of men and women posted on a U.S. dating website, selecting for images that showed a single face large and clear enough to analyze. This left a pool of 35,326 pictures of 14,776 individuals. Gay and straight people, male and female, were represented evenly.

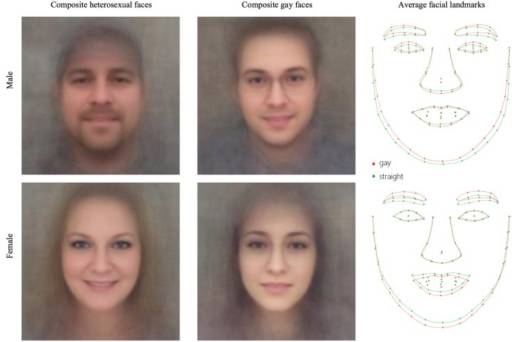

Software called VGG-Face analyzed the faces and looked for correlations between facial features (nose length, jaw width, etc.) and each person's self-declared sexual orientation on the website. When shown one photo of a gay man and one of a straight man, the resulting model correctly identified which was which 81 percent of the time. For women, the success rate was 71 percent. (Accuracy increased when the model was shown more than one image of each person.) Human guessers picked correctly just 61 percent of the time for men and 54 percent for women.

The researchers say in the paper that these results "provide strong support" for the prenatal hormone theory of gay and lesbian sexual orientation. The theory holds that under- or overexposure to prenatal androgens is a key determinant of sexual orientation.

In an authors' note (last updated Sept. 13), the researchers discuss the study's limitations at some length, including the narrow demographics of the people analyzed: white people who self-reported being gay or straight. They also express concerns about the study's implications:

> We were really disturbed by these results and spent much time considering whether they should be made public at all. We did not want to enable the very risks that we are warning against.

> Recent press reports, however, suggest that governments and corporations are already using tools aimed at revealing intimate traits from faces. Facial images of billions of people are stockpiled in digital and traditional archives, including dating platforms, photo-sharing websites, and government databases. Profile pictures on Facebook, LinkedIn, and Google Plus are public by default. CCTV cameras and smartphones can be used to take pictures of others’ faces without their permission.

Critics of the research expressed concerns that it could lead to the very invasion of privacy the authors seek to warn against. HRC/GLAAD also criticized the limited demographic pool used by the researchers, the "superficial" nature of the characteristics analyzed in the model, and the way the media have represented the study.

The authors responded angrily, calling the HRC/GLAAD press release premature and misleading. "They do a great disservice to the LGBTQ community by dismissing our results outright without properly assessing the science behind it, and hurt the mission of the great organizations that they represent," they wrote.

The researchers also stressed, in their authors' note, that they did not invent the tools used. Rather, they applied internet-available software to internet-available data, with the goal of demonstrating the privacy risks inherent in artificially intelligent technologies.

"We studied existing technologies," wrote Kosinski and Wang, "already widely used by companies and governments, to see whether they present a risk to the privacy of LGBTQ individuals."

They added, "We were terrified to find that they do."

Such tools present a special threat, said the authors, to the privacy and safety of gay men and women living under repressive regimes where homosexuality is illegal.

But some LGBT academics and writers rejected this line of reasoning. Oberlin sociology professor Greggor Mattson published a takedown on his website, describing the study as "much less insightful than the researchers claim." The authors' discussion of their ethical concerns suffered from "stunning tone-deafness," Mattson wrote.

At least one LGBT blogger, though, came to the researchers' defense.

Alex Bollinger, writing at LGBTQ Nation, published a post titled "HRC and GLAAD release a silly statement about the ‘gay face’ study." The post argued:

"We should take a stance of curiosity instead of judgment.

"This is just one study that looked at one sample and said a few things. There will be more studies later on that will say other things. Let’s see how that all unfolds before deciding what the correct answer is."

This post was edited Oct. 9 to specify the nature of the American Psychological Association's review of the study.